The Best Data Engineering Courses Online In Chennai program offers an intensive learning experience for professionals who want to build expertise in data engineering: designing, building, and managing the data infrastructure that supports analytics and business decision-making. The course suits both beginners and experienced IT professionals looking to specialize in data engineering.

The program begins with data engineering fundamentals, including data architecture, data modeling, and the end-to-end data lifecycle. You'll learn how to handle structured and unstructured data, how ETL (Extract, Transform, Load) pipelines work, and how to automate and optimize data workflows using tools such as Apache Hadoop, Spark, and Kafka. The training also includes modules on cloud platforms such as AWS, Google Cloud, and Azure for building scalable and secure data solutions.
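As a rough illustration of the ETL pattern, the sketch below runs all three stages with Python's standard library only: `csv` stands in for a source system and an in-memory SQLite database stands in for the warehouse. The sample data, the `orders` table, and the cleaning rule (dropping rows with a missing amount) are hypothetical; a production pipeline would typically use tools such as Spark or Kafka for the same steps.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; a real pipeline would read files, APIs, or databases.
RAW_CSV = """order_id,customer,amount
1,alice,120.50
2,bob,
3,carol,75.00
"""

def extract(text):
    """Extract: parse CSV text into a list of dicts (one per row)."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows with no amount, normalise names, cast types."""
    clean = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        clean.append((int(row["order_id"]), row["customer"].title(), float(row["amount"])))
    return clean

def load(records, conn):
    """Load: write the cleaned records into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT customer, amount FROM orders ORDER BY order_id").fetchall())
# [('Alice', 120.5), ('Carol', 75.0)]
```

Keeping each stage in its own function makes the stages easy to test separately, or to swap the SQLite load for a real warehouse later.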

The course places significant focus on SQL, NoSQL databases, and Big Data technologies, equipping you to work with large datasets and complex queries. You will also learn data warehousing techniques and how to implement data lakes to handle vast amounts of data.

Additionally, you’ll gain practical experience with real-world projects, allowing you to build, manage, and monitor data pipelines. You’ll also learn how to implement data governance and ensure data security, which is critical in today’s data-driven environments.

The Best Data Engineering Courses Online In Chennai program is designed to give you the technical skills to design and implement efficient, scalable data solutions. With proficiency in these areas, you will be prepared to join any organization's data team as a qualified Data Engineer, ready to tackle real-world data challenges.

Hadoop and Spark Course Content

- What is Big data?

- Sources of Big data

- Categories of Big data

- Characteristics of Big data

- Use cases of Big data

- Traditional RDBMS vs Hadoop

- What is Hadoop?

- History of Hadoop

- Understanding Hadoop Architecture

- Fundamentals of HDFS (Blocks, NameNode, DataNode, Secondary NameNode)

- Block Placement & Rack Awareness

- HDFS Read/Write

- Drawbacks of Hadoop 1.x

- Introduction to Hadoop 2.x

- High Availability

- Making/creating directories

- Removing/deleting directories

- Print working directory

- Change directory

- Manual pages

- Help

- Vi editor

- Creating empty files

- Creating file contents

- Copying file

- Renaming files

- Removing files

- Moving files

- Listing files and directories

- Displaying file contents

- Understanding Hadoop configuration files

- Hadoop Components: HDFS and MapReduce

- Overview of Hadoop Processes

- Overview of Hadoop Distributed File System

- The building blocks of Hadoop

- Hands-On Exercise: Using HDFS commands
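The HDFS fundamentals above (blocks and replication) come down to simple arithmetic. The sketch below assumes the Hadoop 2.x defaults of a 128 MB block size (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`); both are configurable per cluster and per file, and the function name is our own.

```python
MB = 1024 * 1024  # HDFS sizes use binary megabytes

def hdfs_block_layout(file_size, block_size=128 * MB, replication=3):
    """Return (number of blocks, bytes in the last block, total raw bytes across replicas)."""
    if file_size < 0:
        raise ValueError("file_size must be non-negative")
    num_blocks = -(-file_size // block_size)  # ceiling division without floats
    last_block = file_size - (num_blocks - 1) * block_size if num_blocks else 0
    # Every block is stored `replication` times (checksum/metadata overhead ignored).
    raw_bytes = file_size * replication
    return num_blocks, last_block, raw_bytes

blocks, last, raw = hdfs_block_layout(500 * MB)
print(blocks, last // MB, raw // MB)  # 4 116 1500
```

HDFS does not pad the final block, so a 500 MB file uses three full 128 MB blocks plus one 116 MB block, and each of those blocks is replicated across DataNodes according to the rack-aware placement policy.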

MapReduce 1 (MRv1)

- Introduction to MapReduce

- How MapReduce works

- Communication between JobTracker and TaskTracker

- Anatomy of a MapReduce Job Submission
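To make the MapReduce flow concrete, the classic word-count example can be simulated in plain Python. This runs on a single machine and only mirrors the map, shuffle/sort, and reduce stages; in Hadoop those stages run as distributed tasks coordinated by the JobTracker and TaskTrackers (MRv1) or by YARN. The function names are illustrative, not Hadoop APIs.

```python
from collections import defaultdict
from itertools import chain

def map_phase(line):
    """Mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1

def shuffle_phase(pairs):
    """Shuffle and sort: group all values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))

def reduce_phase(key, values):
    """Reducer: sum the counts emitted for one word."""
    return key, sum(values)

lines = ["the quick brown fox", "the lazy dog", "The fox"]
mapped = chain.from_iterable(map_phase(line) for line in lines)
grouped = shuffle_phase(mapped)
counts = dict(reduce_phase(k, v) for k, v in grouped.items())
print(counts)
# {'brown': 1, 'dog': 1, 'fox': 2, 'lazy': 1, 'quick': 1, 'the': 3}
```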

MapReduce 2 (YARN)

- Limitations of the MRv1 Architecture

- YARN Architecture

- NodeManager & ResourceManager

Apache Hive

- What is Hive?

- Why Hive?

- What Hive is not

- Metastore DB in Hive

- Architecture of Hive

- Internal table

- External table

- Hive operations

- Static Partition

- Dynamic Partition

- Bucketing

- Bucketing with sorting

- File formats

- Hive performance tuning
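The partitioning and bucketing topics above decide where Hive stores each row: one directory per partition value (named `column=value` under the table's location), and within it a fixed number of bucket files chosen by hashing the bucketing column. The sketch mimics that layout with dynamic partitioning, where the partition values come from each row rather than being fixed in the statement (static partitioning). The table path under Hive's default `/user/hive/warehouse` directory, the columns, and the bucket count are hypothetical, and `zlib.crc32` is only a stand-in: Hive's actual bucketing hash depends on the Hive version and the column type.

```python
import zlib
from collections import defaultdict

def partition_path(table_dir, row, partition_cols):
    """Build the directory for a row, e.g. <table_dir>/country=IN/year=2024."""
    parts = [f"{col}={row[col]}" for col in partition_cols]
    return "/".join([table_dir.rstrip("/")] + parts)

def bucket_for(value, num_buckets):
    """Assign a value to a bucket as hash(value) mod num_buckets.
    zlib.crc32 is a stand-in hash, not Hive's real one."""
    return zlib.crc32(str(value).encode("utf-8")) % num_buckets

rows = [
    {"id": 1, "country": "IN", "year": 2024, "amount": 10},
    {"id": 2, "country": "US", "year": 2024, "amount": 20},
    {"id": 3, "country": "IN", "year": 2023, "amount": 30},
]

# partition directory -> bucket number -> ids stored in that bucket file
layout = defaultdict(lambda: defaultdict(list))
for row in rows:
    path = partition_path("/user/hive/warehouse/sales", row, ["country", "year"])
    layout[path][bucket_for(row["id"], 4)].append(row["id"])

for path in sorted(layout):
    print(path, dict(layout[path]))
```

Queries that filter on `country` or `year` only need to read the matching directories (partition pruning), while bucketing on `id` keeps equal keys in the same bucket file, which helps joins and sampling.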

Apache Sqoop

- What is Sqoop?

- Architecture of Sqoop

- Listing databases

- Listing tables

- Different ways of setting the password

- Using an options file

- Sqoop eval

- Sqoop import into a target directory

- Sqoop import into the warehouse directory

- Setting the number of mappers

- Life cycle of a Sqoop import

- Split-by clause

- Importing all tables

- Import into Hive tables

- Export from Hive tables

- Setting the number of mappers during the export
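When Sqoop imports a table in parallel, it queries the minimum and maximum of the `--split-by` column (by default the primary key) and divides that range among the mappers set with `--num-mappers`/`-m` (4 by default). The sketch below shows the idea for an integer column; it is a simplification, not Sqoop's exact splitting code, and the column name and bounds are hypothetical.

```python
def split_ranges(min_val, max_val, num_mappers=4):
    """Divide the inclusive range [min_val, max_val] into contiguous,
    roughly equal ranges, one per mapper (simplified illustration)."""
    if min_val > max_val:
        raise ValueError("min_val must be <= max_val")
    total = max_val - min_val + 1
    num_mappers = min(num_mappers, total)  # never more splits than distinct values
    size, extra = divmod(total, num_mappers)
    ranges, lo = [], min_val
    for i in range(num_mappers):
        hi = lo + size + (1 if i < extra else 0) - 1  # first `extra` splits get one more value
        ranges.append((lo, hi))
        lo = hi + 1
    return ranges

def where_clauses(column, ranges):
    """Render the WHERE condition each mapper would apply to its own query."""
    return [f"{column} >= {lo} AND {column} <= {hi}" for lo, hi in ranges]

# Suppose the bounding query SELECT MIN(order_id), MAX(order_id) returned 1 and 10.
for clause in where_clauses("order_id", split_ranges(1, 10, 4)):
    print(clause)
```

This is also why a skewed or non-numeric split column can leave some mappers with far more rows than others, and why choosing a well-distributed `--split-by` column matters.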

Python

- What is Python?

- Why Python?

- Installing Python

- Conditions

- Loops

- Break statement

- Continue statement

- The range() function

- Command line arguments

- String Object Basics

- String Methods

- Splitting and Joining Strings

- String format functions

- List Object Basics

- List Methods

- Tuples

- Sets

- Frozen sets

- Dictionary

- Iterators

- Generators

- Decorators

- List, set, and dictionary comprehensions

- Creating Classes and Objects

- Inheritance

- Multiple Inheritance

- Working with files

- Reading and Writing files

- Using Standard Modules

- Creating custom modules

- Exception handling with try-except

- The finally clause in exception handling
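Several of the Python topics above (decorators, generators, comprehensions, and `try`/`except`/`finally`) often appear together in data-processing scripts. The following sketch combines them to read a file lazily and parse numeric values; the file contents and the names `log_calls`, `read_records`, and `parse_amount` are made up for illustration.

```python
import functools
import os
import tempfile

CALLS = []  # records which decorated functions were called

def log_calls(func):
    """Decorator: note the function name in CALLS, then call the original function."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        CALLS.append(func.__name__)
        return func(*args, **kwargs)
    return wrapper

@log_calls
def read_records(path):
    """Generator: lazily yield non-empty, stripped lines instead of loading the whole file."""
    f = open(path, encoding="utf-8")
    try:
        for line in f:
            if line.strip():
                yield line.strip()
    finally:
        f.close()  # runs when the generator finishes, is closed early, or raises

def parse_amount(text):
    """Convert text to float; return None for malformed values instead of crashing."""
    try:
        return float(text)
    except ValueError:
        return None

# Write a small sample file (hypothetical contents) to a temporary location.
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False, encoding="utf-8") as tmp:
    tmp.write("10\n\nabc\n32.5\n")
    path = tmp.name

try:
    amounts = [parse_amount(line) for line in read_records(path)]  # list comprehension
    valid = {a for a in amounts if a is not None}                   # set comprehension
finally:
    os.remove(path)  # clean up the temporary file whether or not parsing failed

print(amounts, valid, CALLS)
```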

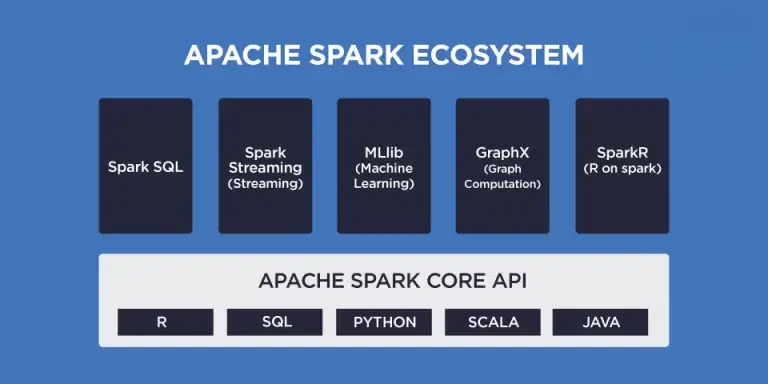

- What is Apache Spark & Why Spark?

- Spark History

- Unification in Spark

- Spark ecosystem Vs Hadoop

- Spark with Hadoop

- Introduction to Spark’s Python and Scala Shells

- Spark Standalone Cluster Architecture and its application flow

- RDD Basics and its characteristics, Creating RDDs

- RDD Operations

- Transformations

- Actions

- RDD Types

- Lazy Evaluation

- Persistence (Caching)
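Lazy evaluation and caching are easier to grasp with a toy model. The class below is not Spark's API: it is a hypothetical `LazyDataset` that only records transformations (`map`, `filter`) and runs them when an action (`collect`, `count`) is called, which is the same idea Spark applies to RDDs. Real Spark also distributes the work across partitions and uses the recorded lineage to recompute lost partitions.

```python
class LazyDataset:
    """Toy model of an RDD: transformations are recorded, actions trigger computation.
    Method names echo Spark's, but this is not Spark."""

    def __init__(self, source, ops=()):
        self._source = source    # zero-argument function returning the base data
        self._ops = tuple(ops)   # recorded transformations (the "lineage")
        self._persist = False
        self._cached = None
        self.computations = 0    # how many times the lineage was actually executed

    # Transformations: return a new dataset and compute nothing.
    def map(self, fn):
        return LazyDataset(self._source, self._ops + (("map", fn),))

    def filter(self, pred):
        return LazyDataset(self._source, self._ops + (("filter", pred),))

    def cache(self):
        """Mark the dataset for persistence; data is stored on the next action."""
        self._persist = True
        return self

    def _compute(self):
        if self._cached is not None:
            return self._cached
        self.computations += 1
        data = list(self._source())
        for kind, fn in self._ops:
            data = [fn(x) for x in data] if kind == "map" else [x for x in data if fn(x)]
        if self._persist:
            self._cached = data
        return data

    # Actions: force the lineage to run.
    def collect(self):
        return list(self._compute())

    def count(self):
        return len(self._compute())

squares = LazyDataset(lambda: range(1, 6)).map(lambda x: x * x)  # nothing computed yet
evens = squares.filter(lambda x: x % 2 == 0)
print(evens.computations)  # 0: transformations are lazy
print(evens.collect())     # [4, 16]
print(evens.count())       # 2
print(evens.computations)  # 2: each action re-ran the lineage
evens.cache()
evens.collect()
evens.count()
print(evens.computations)  # 3: computed once more, then served from the cache
```

In PySpark the corresponding calls are `rdd.map(...)`, `rdd.filter(...)`, `rdd.cache()`, `rdd.collect()`, and `rdd.count()`; without `cache()` or `persist()`, each action generally recomputes the lineage from the source.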

Advanced Spark Programming

- Accumulators and Fault Tolerance

- Broadcast Variables

- Custom Partitioning

- Dealing with different file formats

- Hadoop Input and Output Formats

- Connecting to diverse Data Sources

Spark SQL

- Linking with Spark SQL

- Initializing Spark SQL

- DataFrames & Caching

- Case Classes, Inferred Schema

- Loading and Saving Data

- Apache Hive

- Data Sources/Parquet

- JSON

- Spark SQL User Defined Functions (UDFs)

Kafka, Spark Streaming, and HBase

- Getting started with Kafka

- Understanding Kafka Producer and Consumer APIs

- Deep dive into producer and consumer APIs

- Ingesting web server logs into Kafka

- Getting started with Spark Streaming

- Getting started with HBase

- Integrating Kafka, Spark Streaming, and HBase
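When ingesting web server logs into Kafka, the usual first step is turning each raw log line into a structured, serialized record before a producer sends it. The sketch assumes Apache Common Log Format input and JSON values; the field names are our own choice. Using the client host as the message key is also an assumption: with Kafka's default partitioner, records with the same key go to the same partition, which preserves their relative order.

```python
import json
import re

# Apache Common Log Format: host ident authuser [timestamp] "request" status bytes
CLF_PATTERN = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) \[(?P<ts>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" (?P<status>\d{3}) (?P<size>\d+|-)'
)

def to_kafka_record(line):
    """Turn one log line into a (key, value) pair of bytes, or None if the line is malformed."""
    m = CLF_PATTERN.match(line)
    if m is None:
        return None
    event = m.groupdict()
    event["status"] = int(event["status"])
    event["size"] = 0 if event["size"] == "-" else int(event["size"])  # "-" means no body
    key = event["host"].encode("utf-8")  # key by client host (an assumption, see above)
    value = json.dumps(event, sort_keys=True).encode("utf-8")
    return key, value

sample = '127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326'
key, value = to_kafka_record(sample)
print(key, json.loads(value)["path"])
```

With a client library such as kafka-python, each pair could then be sent with `KafkaProducer.send(topic, key=key, value=value)`, where the topic name is your own; a Spark Streaming job would consume and parse the JSON values downstream.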

AWS

- Introduction to AWS

- Sign up for an AWS account

- Set up Cygwin on Windows

- Quick Preview of Cygwin

- Understand Pricing

- Create your first EC2 instance

- Connecting to an EC2 instance

- Understanding the EC2 dashboard's left menu

- EC2 instance states

- Describing an EC2 instance

- Using Elastic IPs to connect to an EC2 instance

- Using security groups to secure an EC2 instance

- Understanding the concept of a bastion server

- Terminating an EC2 instance and releasing all its resources

- Create security credentials for your AWS account

- Setting up the AWS CLI on Windows

- Creating an S3 bucket

- Deleting root access keys

- Enable MFA for the root account

- Introduction to IAM users and customizing the sign-in link

- Create your first IAM user

- Create a group and add a user

- Configure an IAM password policy

- Understanding IAM best practices

- AWS managed policies and creating custom policies

- Assigning a policy to entities (users and/or groups)

- Creating a role for the EC2 trusted entity with permissions on S3

- Assigning the role to an EC2 instance
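Creating an EC2 role with S3 permissions involves two JSON documents: a trust policy naming EC2 as the trusted entity, and a permissions policy. The sketch below builds both with Python's `json` module; the bucket name is hypothetical, and the read-only actions are one example of least privilege rather than what every exercise needs. EC2 attaches roles through an instance profile, which the IAM console creates automatically when you create a role for EC2.

```python
import json

BUCKET = "my-data-bucket"  # hypothetical bucket name

# Trust policy: allows the EC2 service to assume the role (the "trusted entity").
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Service": "ec2.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
}

# Permissions policy: read-only access to one bucket.
# ListBucket applies to the bucket ARN; GetObject applies to the objects inside it.
s3_read_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:ListBucket", "Resource": f"arn:aws:s3:::{BUCKET}"},
        {"Effect": "Allow", "Action": "s3:GetObject", "Resource": f"arn:aws:s3:::{BUCKET}/*"},
    ],
}

print(json.dumps(trust_policy, indent=2))
print(json.dumps(s3_read_policy, indent=2))
```

The printed documents can be pasted into the IAM console, or saved to files and passed to the AWS CLI (for example, `aws iam create-role --role-name <name> --assume-role-policy-document file://trust.json`).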

Amazon EMR

- Introduction to EMR

- EMR concepts

- Prerequisites for setting up an EMR cluster

- Setting up data sets

- Setting up an EMR cluster with Spark using quick options

- Connecting to the EMR cluster

- Submitting a Spark job on the EMR cluster

- Validating the results

- Terminating the EMR cluster

Apache Airflow

- What is Airflow?

- Airflow terminology

- Why Airflow?

- What is the Airflow Scheduler?

- What is a DAG Run?

- Airflow Operators

- Create your first DAG/workflow

- Running a PySpark job with Airflow
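An Airflow DAG is a set of tasks plus their dependencies, and the scheduler only starts a task once its upstream tasks have succeeded. The sketch below models just that ordering rule with Python's `graphlib` module (Python 3.9+); it is not Airflow code, and the task names are hypothetical. It groups tasks into waves whose members could run in parallel, and `graphlib` raises `CycleError` if the graph contains a cycle, which is why a workflow must be acyclic.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of upstream tasks it depends on.
dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "spark_transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"spark_transform"},
    "notify": {"load_warehouse"},
}

def run_order(graph):
    """Group tasks into waves: a task is ready only when all its upstream tasks are done."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose dependencies are all finished
        waves.append(ready)
        sorter.done(*ready)                 # pretend they all succeeded
    return waves

for i, wave in enumerate(run_order(dag), start=1):
    print(i, wave)
```

In an actual Airflow DAG file the same dependencies would be declared on operators, e.g. `[extract_orders, extract_customers] >> spark_transform >> load_warehouse >> notify`.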

Projects and Interview Preparation

- Three real-time projects

- Deployment on multiple platforms

- How to explain your projects in an interview

- Data engineer roles and responsibilities

- A data engineer's day-to-day work

- One-to-one resume discussion covering projects, technologies, and experience

- Mock interview for every student

- Real-time interview questions

Moreover, the Best Data Engineering Courses Online In Chennai program offers a wide range of learning formats, including live online classes, pre-recorded lectures, and self-paced modules, so both full-time professionals and students can find a study plan that suits their schedule. This flexibility matters for anyone who wants to upskill without compromising their current job or academic commitments.

The course also provides access to industry experts and mentorship opportunities, allowing students to receive guidance from professionals actively working in the field of data engineering. This mentorship ensures that students not only gain theoretical knowledge but also understand the practical aspects of data infrastructure, cloud technologies, and Big Data ecosystems.

Another benefit of enrolling in the Best Data Engineering Courses Online In Chennai is the career support provided, including resume building, mock interviews, and job placement assistance. The program aims to equip learners with the confidence and expertise to apply for high-demand roles in data engineering across various sectors.

By completing the Best Data Engineering Courses Online In Chennai, you will also gain exposure to cutting-edge technologies and emerging trends in the data engineering space, ensuring that your skills remain relevant and up-to-date. Whether you are looking to transition into a new career or advance in your current role, this course provides the comprehensive training needed to excel.

Take the leap and enroll in the Best Data Engineering Courses Online In Chennai today. With top-notch instruction, hands-on experience, and robust career support, this course positions you to become a highly skilled Data Engineer and make a valuable contribution to your organization’s data-driven decision-making processes.

Other Courses:

- Azure Data Engineer

- Revised Data Engineering and more...

Frequently Asked Questions (FAQs)

Q1. What topics are covered in the Best Data Engineering Courses Online In Chennai?

The course covers topics such as data architecture, data modeling, ETL pipelines, cloud platforms (AWS, Google Cloud, Azure), SQL, NoSQL databases, Big Data technologies, and data warehousing techniques.

Q2. Who should enroll in the Best Data Engineering Courses Online In Chennai?

This course is designed for both beginners looking to start their career in data engineering and experienced IT professionals who want to specialize in this field.

Q3. What practical skills will I gain from this course?

You will gain practical skills in building and managing data pipelines, implementing data governance, ensuring data security, and optimizing data workflows.

Q4. Is there hands-on experience involved in the training?

Yes, the course includes real-world projects that provide hands-on experience in managing data infrastructure and working with data pipelines.

Q5. What tools and technologies will I learn?

You will learn to use various tools and technologies including Apache Hadoop, Spark, Kafka, and cloud platforms like AWS, Google Cloud, and Azure.